Self Hosted Kubernetes Agent

Self-hosted agents allow you to run env0 deployment workloads on your own Kubernetes cluster.

- Execution is contained within your own servers/infrastructure.

- The agent requires an internet connection but no inbound network access.

- Secrets can be stored on your own infrastructure.

Feature Availability

Self-hosted agents are only available to Enterprise-level customers. Click here for more details

Requirements

- Kubernetes cluster at version >= 1.24

- Autoscaler

- Persistent Volume/Storage Class (optional)

- AMD64 or ARM64-based nodes.

- The agent will be installed using a Helm chart.

Cluster Installation

The agent can be run on an existing Kubernetes cluster in a dedicated namespace, or you can create a cluster just for the agent.

Use our k8s-modules repository, which contains Terraform code for easier cluster installation. You can use the main provider folder for a complete installation, or a specific module to fulfill only certain requirements.

Autoscaler (recommended, but optional)

- While optional, configuring horizontal auto-scaling will allow your cluster to adapt to the concurrency and deployment requirements based on your env0 usage. Otherwise, your deployment concurrency will be limited by the cluster's capacity. Please also see Job Limits if you wish to control the maximum number of concurrent deployments.

- The env0 agent will create a new pod for each deployment you run on env0. Pods are ephemeral and will be destroyed after a single deployment.

- A pod running a single deployment requires at least `cpu: 460m` and `memory: 1500Mi`, so the cluster nodes must be able to provide this resource request. Limits can be adjusted by providing custom configuration during chart installation.

- Minimum node requirements: an instance with at least 2 CPU and 8GiB memory.

For the EKS cluster, you can use this TF example.

Persistent Volume/Storage Class (optional)

- env0 will store the deployment state and working directory on a persistent volume in the cluster.

- Must support Dynamic Provisioning and ReadWriteMany access mode.

- The requested storage space is `300Gi`.

- The cluster must include a `StorageClass` named `env0-state-sc`.

- The Storage Class should be set up with `reclaimPolicy: Retain`, to prevent data loss in case the agent needs to be replaced or uninstalled.

We recommend the current implementations for the major cloud providers:

| Cloud | Solution |
|---|---|
| AWS | For the EKS cluster, you can use this TF example - EFS CSI-Driver/StorageClass |
| GCP | |
| Azure | |
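The StorageClass requirements listed above (the name `env0-state-sc`, dynamic provisioning, ReadWriteMany, and `reclaimPolicy: Retain`) could be met on EKS with a manifest roughly like the one below. This is a minimal sketch, not env0's Terraform example: the `efs.csi.aws.com` provisioner, the `parameters` block, and the file system ID `fs-0123456789abcdef0` are assumptions for illustration and must be adapted to your cloud and CSI driver.

```shell
# Write a StorageClass manifest that matches the requirements listed above.
# Assumption: AWS EFS CSI driver; swap the provisioner/parameters for other clouds.
cat > env0-state-sc.yaml <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: env0-state-sc                  # default name required by this guide
provisioner: efs.csi.aws.com           # assumption: EFS supports ReadWriteMany
parameters:
  provisioningMode: efs-ap             # dynamic provisioning via EFS access points
  fileSystemId: fs-0123456789abcdef0   # hypothetical placeholder for your EFS ID
  directoryPerms: "700"
reclaimPolicy: Retain                  # keep data if the agent is replaced or uninstalled
EOF
```

The file can then be applied with `kubectl apply -f env0-state-sc.yaml` before the chart is installed.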

PVC Alternative

By default, the deployment state and working directory are stored in a PV (Persistent Volume) which is configured on your Kubernetes cluster. Whenever PV creation or management is difficult, or not required, you can use env0-Hosted Encrypted State with `env0StateEncryptionKey`.

Sensitive Secrets

- Using secrets stored on the env0 platform is not allowed for self-hosted agents, since self-hosted agents allow you to store secrets on your own infrastructure.

- Customers using self-hosted agents may use their own Kubernetes Secret to store sensitive values - see env0ConfigSecretName below.

- If you are migrating from SaaS to a self-hosted agent, deployments attempting to use secrets stored on the env0 platform will fail.

- This includes sensitive configuration variables, SSH keys, and Cloud Deployment credentials. The values for these secrets should be replaced with references to your secret store, as detailed in the table below.

- In order to use an external secret store, authentication to the secret store must be configured using a custom Helm values file. The required parameters are detailed below.

- Storing secrets is supported using these secret stores:

| Secret store | Secret reference format | Secret Region & Permissions |
|---|---|---|
| AWS Secrets Manager (us-east-1) | | Set by the |
| GCP Secrets Manager | | Your GCP project's default region. Access to the secret must be possible using the |
| Azure Key Vault | | Your Azure subscription's default region |
| HashiCorp Vault | | |
| OCI Vault Secrets | | The region defined in the credentials provided in the agent configuration. |

Allow storing secrets in env0

Alternatively, you can explicitly allow env0 to store secrets on its platform by opting in through your organization's policy. For more info read here

Internal Values

The following secrets are required for the agent components to communicate with env0's backend. They are generated and supplied in your values file.

- `awsAccessKeyIdEncoded`

- `awsSecretAccessKeyEncoded`

- `env0ApiGwKeyEncoded`

Custom/Optional Configuration

A Helm values.yml will be provided by env0 with the configuration env0 provides.

The customer will need to provide a values.customer.yml with the following values (optional), to enable specific features:

| Keys | Description | Required for feature | Can be provided via a Kubernetes Secret? |

|---|---|---|---|

| Custom Docker image URI and Base64 encoded | Custom Docker image. See Using a custom image in an agent | No |

| A reference to a k8s secret name that holds a Docker pull image token | Custom Docker image that is hosted on a private Docker registry | No |

| Base64 encoded Infracost API key | Cost Estimation | Yes:

|

| Base64 encoded AWS Access Key ID & Secret | AWS Assume role for deploy credentials. Also, see Authenticating the agent on AWS EKS | Yes:

|

| Container resource limits

Read more about resource allocation

Recommended | Custom deployment pod size | No |

| Container resource requests. Recommended | Custom deployment container resources | No |

| An array of | Custom tolerations | No |

| An array of | Custom tolerations | No |

| Allows you to constrain which nodes env0 pods are eligible to be scheduled on. see docs | Custom node affinity | No |

| Affinity for deployment pods. This will override the default | Custom node affinity | No |

| Base64 encoded AWS Access Key ID & Secret. Requires the | Using AWS Secrets Manager to store secrets for the agent | Yes:

|

| Base64 encoded GCP project name and JSON service-key contents. Requires the | Using GCP Secret Manager to store secrets for the agent. These credentials are not used for the deployment itself. If | Yes:

|

| Base64 encoded Azure Credentials. | Using Azure Key Vault Secrets to store secrets for the agent | Yes:

|

| OCI credentials.

All should be defined separately within the same object - Any field that ends with | Using OCI Vault to store secrets for the agent. These credentials are not used for the deployment itself. | Yes:

|

|

| Using HCP Vault to store secrets for the agent | Yes:

|

| Set HCP Vault authentication. First, set the cluster's URL:

Then, you should choose one of the following login methods:

| Using HCP Vault to store secrets for the agent | No |

| Base64 Bitbucket server credentials in the format `username:token` (using a Personal Access token) | On-premise Bitbucket Server installation | Yes:

|

| Base64 Gitlab Enterprise credentials in the form of a Personal Access token | On-premise Gitlab Enterprise installation | Yes:

|

| In cases where your GitLab instance base URL is not at the root of the URL but on a separate path, e.g., | On-premise Gitlab Enterprise installation | No |

| GitHub Enterprise Integration (see step 3) | On-premise GitHub Enterprise installation | Yes:

|

| When set, cloning a git repository will only be permitted if the git url matches the regular expression set. | VCS URL Whitelisting | No |

| An array of strings. Each represents a name of Kubernetes secret that contains custom CA certificates. Those certificates will be available during deployments. | Custom CA Certificates. More details here | No |

| When set to | Ignoring SSL/TLS certs for on-premise git servers | No |

| Ability to change the default PVC storage class name for env0 self-hosted agent | the default is Please note: When changing this you should also change your storage class name to match this configuration | No |

| Customize the Kubernetes service account used by the deployment pod. Primarily for pod-level IAM permissions | the default is | No |

| The number of successful and failed deployment jobs that should be kept in the Kubernetes cluster history | The default is 10 for each value | No |

| When set to | Increased agent pod security | No |

| A base64 encoded string (password). When set, deployment state and working directory will be encrypted and persisted on env0's end | Yes:

| |

| A base64 encoded string (password). Used to enable the "Environment Outputs" feature | See notes in Environment Outputs | No |

| Logger config

| No | |

| Set | No | |

| Agent's Proxy pod config:

| No | |

| A number of deployment pods that should be left "warm" (running & idle) and ready for new deployments | No | |

| Additional Environment variables to be passed to the deployment pods, which will also be passed to the deployment process. These are set as a plain yaml object, i.e.:

| No | |

| Additional labels to be set on deployment pods. Those are set as a plain yaml object, i.e.:

| No | |

| Additional annotations to be set on deployment pods. These are set as a plain yaml object, i.e.:

| No | |

| Additional Environment variables to be passed to the agent pods, which will also be passed to the agent proxy / trigger. These are set as a plain yaml object, i.e.:

| No | |

| Additional annotations to be set on agent (trigger/proxy) pods. These are set as a plain yaml object, i.e.:

| No | |

| Additional annotations to be set on agent (trigger/proxy) pods. These are set as a plain yaml object, i.e.:

| No | |

|

| Mount custom secrets | No |

|

| Mount secret files to a given mountPath | No |

| A Kubernetes Secret name. Can be used to provide sensitive values listed in this table, instead of providing them in the Helm values files. | No | |

| Custom role for AWS SSM secret fetching. Note: only used when useOidcForAwsSsm=true | No | |

| When set to | No | |

| Using Internal Proxy for communication with env0 or your self hosted VCS

To enable the proxy for

If you want to exclude a URL from using the proxy, use

| No | |

| If set to "true", this agent will throw an error when any user tries to destroy any environment or run a custom "task" in it | No | |

| Additional configurations to be merged with the spec of deployment pods. Can be used to add hostAliases, dnsConfig, etc. Example:

| ||

| Additional configurations to be merged with the spec of agent (trigger/proxy) pods. Can be used to add Example:

|

Base64 Encoding Values

To ensure no additional newline characters are encoded, please use the following command in your terminal:

echo -n "$VALUE" | base64
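The effect of the trailing newline can be seen in a short sketch. The value `my-secret` is a made-up example, and `printf '%s'` is used here as a portable stand-in for `echo -n`; neither comes from the env0 documentation.

```shell
# Assumption: VALUE holds the raw secret; "my-secret" is a made-up example value.
VALUE='my-secret'

# Encode without a trailing newline (printf '%s' behaves like echo -n, portably).
ENCODED=$(printf '%s' "$VALUE" | base64)
echo "$ENCODED"         # → bXktc2VjcmV0

# For comparison: plain echo appends a newline, which changes the encoding.
WITH_NEWLINE=$(echo "$VALUE" | base64)
echo "$WITH_NEWLINE"    # → bXktc2VjcmV0Cg==

# Round-trip check: decoding must give back the original value exactly.
DECODED=$(printf '%s' "$ENCODED" | base64 --decode)
[ "$DECODED" = "$VALUE" ] && echo "round-trip ok"
```

The extra `Cg==` at the end of the second result is the encoded newline, which is what the command above avoids.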

Storing Secret Values as Kubernetes Secret

Some of the configuration values listed above are sensitive. As an alternative to setting them in your `values.customer.yml`, you can provide sensitive keys and values from your own Kubernetes Secret prior to the agent's installation/upgrade.

Setting the `env0ConfigSecretName` will instruct the agent to extract the needed values from the given Kubernetes Secret and will override values from the Helm value files.

The Kubernetes Secret must be accessible from the agent, and sensitive values must be Base64 encoded.

Further Configuration

The env0 agent externalizes a wide array of values that may be set to configure the agent.

We do our best to support all common configuration scenarios, but sometimes a more exotic or pre-release configuration is required.

For such advanced cases, see this reference example of utilizing Kustomize alongside Helm Post Rendering to further customize our chart.

Job Limits

You may wish to add a limit on the number of concurrent runs. To do so, add a Resource Quota to the agent namespace with a parameter on count/jobs.batch.

See here for more details.
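As a rough sketch of such a quota, the snippet below writes a ResourceQuota manifest for the `env0-agent` namespace used in the install command of this guide. The quota name `env0-job-limit` and the limit of `20` are hypothetical values chosen for illustration. An object-count quota counts the Job objects present in the namespace, so finished jobs retained in the history may also count toward it; it is worth checking the limit against your job-history settings.

```shell
# Write a ResourceQuota that caps the number of Job objects in the agent namespace.
# Assumptions: quota name and the limit of 20 are illustrative, not env0 defaults.
cat > env0-job-quota.yaml <<'EOF'
apiVersion: v1
kind: ResourceQuota
metadata:
  name: env0-job-limit        # hypothetical name
  namespace: env0-agent       # namespace used in the install command in this guide
spec:
  hard:
    count/jobs.batch: "20"    # maximum number of Job objects allowed at once
EOF
```

The manifest can be applied with `kubectl apply -f env0-job-quota.yaml`, and current usage inspected with `kubectl describe resourcequota env0-job-limit -n env0-agent`.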

Installation

-

Add our Helm Repo

helm repo add env0 https://env0.github.io/self-hosted -

Update Helm Repo

helm repo update -

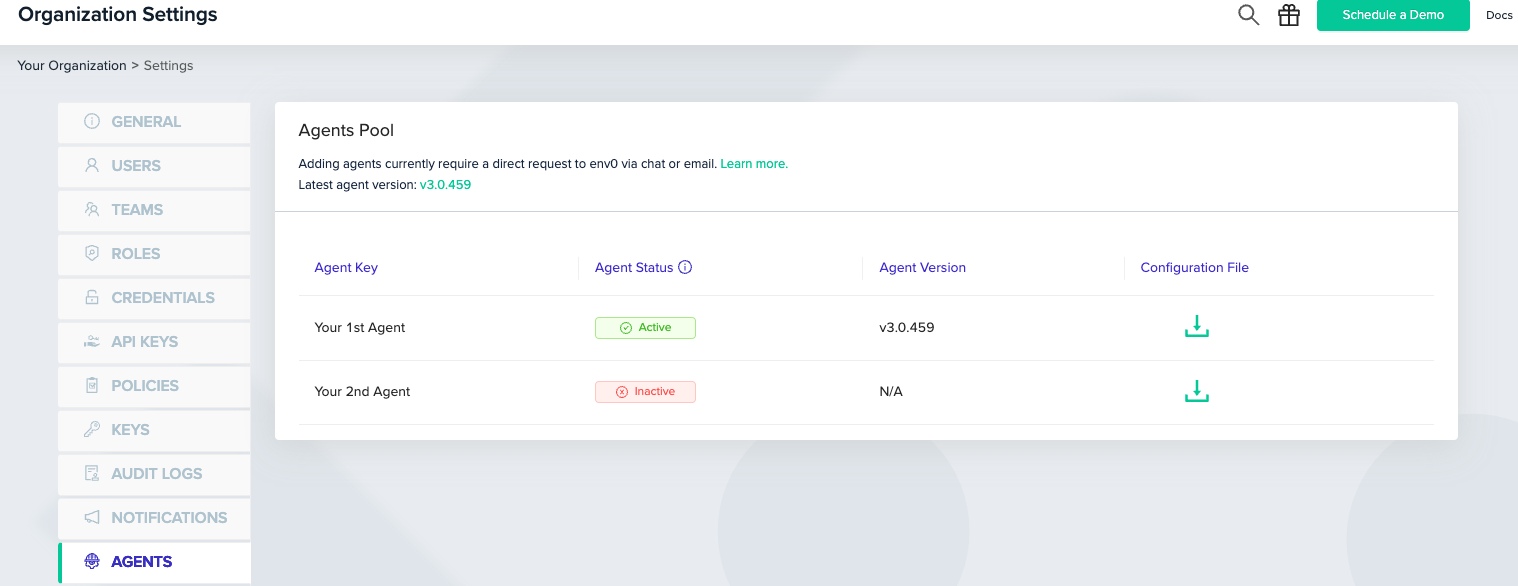

Download the configuration file:

<your_agent_key>_values.yamlfrom Organization Settings -> Agents tab

- Install the Helm Charts

helm install --create-namespace env0-agent env0/env0-agent --namespace env0-agent -f <your_agent_key>_values.yaml -f values.customer.yaml # values.customer.yaml should contain any optional configuration options as detailed above

TF example

Example for helm install

The Helm provider version must be >= 2.5.0

Installing from source

If you decide not to install the Helm chart from our Helm repo and instead install from the source code (for example, after a git clone), you might need to run:

helm dependency build <path-to-the-source-code>

Upgrade

helm upgrade env0-agent env0/env0-agent --namespace env0-agent

Upgrade Changes

Previously, you would have had to download the values.yaml file. This is no longer required for an upgrade. However, we do recommend keeping the version of the values.yaml file you used to install the agent, in case a rollback is required during the upgrade process.

Custom Agent Docker Image

If you extended the Docker image of the agent, you should update the agent version in your custom image as well.

Verify Installation/Upgrade

After installing a new version of the env0 agent helm chart, it is highly recommended to verify the installation by running:

helm test env0-agent --namespace env0-agent --logs --timeout 1m

Using the helm template command

As an alternative to installing the agent directly with helm, you can use helm template to generate the K8S yaml files. You can then apply these files through a different K8S pipeline, such as kubectl apply or ArgoCD.

To generate the yaml files using helm template, first add the env0 Helm repository:

helm repo add env0 https://env0.github.io/self-hosted

helm repo update

Then, run the following command.

If your Kubernetes cluster is version 1.21 and up:

helm template env0-agent env0/env0-agent --kube-version=<KUBERNETES_VERSION> --api-version=batch/v1/CronJob -n <MY_NAMESPACE> -f values.yaml

If your Kubernetes cluster version is less than 1.21:

helm template env0-agent env0/env0-agent --kube-version=<KUBERNETES_VERSION> -n <MY_NAMESPACE> -f values.yaml

- `<KUBERNETES_VERSION>` is the version of your Kubernetes cluster

- `<MY_NAMESPACE>` is the k8s namespace in which the agent will be installed

- `values.yaml` is the values file downloaded from env0's Organization Settings -> Agents tab. You can also add your own custom values to this file.
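One way to hand the rendered output to another pipeline is to write it to a file first. The helper below is only a sketch: the function name `render_env0_agent` and its argument order are hypothetical, and it uses the command form for Kubernetes 1.21 and up shown above.

```shell
# Render the env0 agent chart into a single yaml file.
# Hypothetical helper: $1 = Kubernetes version, $2 = namespace, $3 = output file.
render_env0_agent() {
  kube_version="$1"
  namespace="$2"
  out_file="$3"
  helm template env0-agent env0/env0-agent \
    --kube-version="$kube_version" \
    --api-version=batch/v1/CronJob \
    -n "$namespace" \
    -f values.yaml > "$out_file"
}
```

For example, `render_env0_agent 1.27 env0-agent env0-agent.yaml` followed by `kubectl apply -n env0-agent -f env0-agent.yaml` would apply the rendered files, assuming the namespace already exists; the version and file name here are placeholders.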

Using env0ConfigSecretName with the helm template command

If using `helm template`, the feature that checks the Kubernetes secret defined by the env0ConfigSecretName Helm value to determine whether the PVC should be created will not function. This feature relies on an active connection to the cluster.

Outbound Domains

The agent needs outbound access to the following domains:

| Wildcard | Used by |

|---|---|

| *.env0.com, *.amazonaws.com | env0 SaaS platform, the agent needs to communicate with the SaaS platform |

| ghcr.io | GitHub Docker registry which holds the Docker container of the agent |

| *.hashicorp.com | Downloading Terraform binaries |

| registry.terraform.io | Downloading public modules from the Terraform Registry |

| registry.opentofu.org | Downloading public modules from the OpenTofu Registry |

| github.com, gitlab.com, bitbucket.org | Git VCS providers (ports 22, 9418, 80, 443) |

| api.github.com | Terragrunt installation |

| *.infracost.io | Cost estimation by Infracost |

| openpolicyagent.org | Installing Open Policy Agent, used for Approval Policies |

- Make sure to allow access to your cloud providers, VCS domains, and any other tool that creates an outbound request.
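A quick way to sanity-check outbound access is to probe the concrete (non-wildcard) hosts from the table over HTTPS. The sketch below is an illustration only: the function names are hypothetical, it assumes `curl` is available where it runs (ideally a pod or node inside the cluster), and it covers port 443 only, not the wildcard domains or the git ports 22 and 9418.

```shell
# Concrete (non-wildcard) hosts taken from the table above.
DOMAINS="ghcr.io registry.terraform.io registry.opentofu.org github.com api.github.com openpolicyagent.org"

# Succeeds if an HTTPS connection to the host completes within 10 seconds.
# Any HTTP status counts as reachable; only connection failures are errors.
check_domain() {
  curl --silent --output /dev/null --max-time 10 "https://$1"
}

# Probe every host, print one line per host, and return non-zero if any failed.
check_all_domains() {
  failed=0
  for host in $DOMAINS; do
    if check_domain "$host"; then
      echo "ok      $host"
    else
      echo "FAILED  $host"
      failed=1
    fi
  done
  return "$failed"
}
```

Calling `check_all_domains` prints the status of each host and exits non-zero when at least one host is unreachable, which makes it usable as a pre-install check in a script.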

Firewall Rules

Note that if your cluster is behind a managed firewall, you might need to whitelist the cluster API server's FQDN and corresponding public IP.
