Standalone Docker Agent

Running deployments on a standalone docker

Choosing the docker agent is the quickest way to start running the self-hosted agent.

Running a deployment-agent

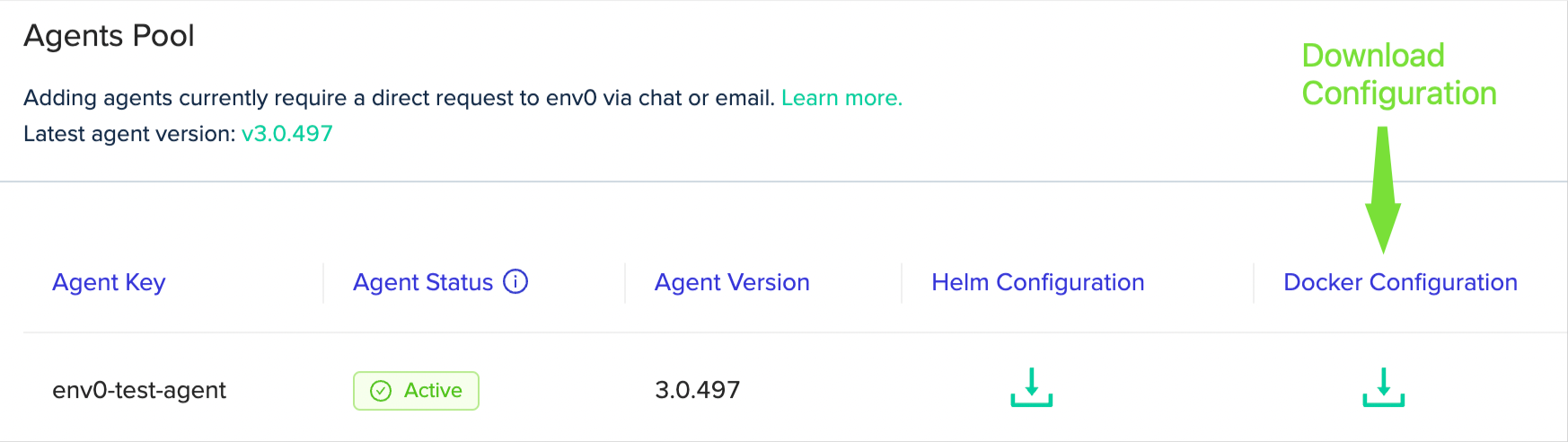

- Download the Docker configuration (env0.env file) from the Agents tab under Organization > Settings.

- Create your state encryption key, which is the base64 encoding of any string you choose, and add it to env0.env as a new environment variable:

ENV0_STATE_ENCRYPTION_KEY=<your-base64-encryption-key>

ℹ️ This value will be used to encrypt and decrypt the environment state and working directory

ℹ️ Read more about the state encryption key here

- Run the agent:

docker run --env-file ~/path/to/env0.env ghcr.io/env0/deployment-agent:latest
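The key-creation step above can be sketched as a small shell helper. This is a minimal sketch, not an official env0 tool: the function name add_state_encryption_key and its two arguments are hypothetical, and it assumes a POSIX shell with the base64 utility installed.

```shell
# Hypothetical helper: append a base64-encoded state encryption key to an env file.
#   $1 = path to the downloaded env0.env file
#   $2 = any string you choose as the source of the key
add_state_encryption_key() {
  env_file="$1"
  secret="$2"

  # base64-encode the chosen string; tr strips line wraps that some
  # base64 builds insert for long inputs.
  key="$(printf '%s' "$secret" | base64 | tr -d '\n')"

  # If the file exists and does not end with a newline, add one so the
  # new variable starts on its own line.
  if [ -s "$env_file" ] && [ -n "$(tail -c 1 "$env_file")" ]; then
    printf '\n' >> "$env_file"
  fi

  printf 'ENV0_STATE_ENCRYPTION_KEY=%s\n' "$key" >> "$env_file"
}
```

Calling add_state_encryption_key ~/path/to/env0.env 'a-string-you-choose' before the docker run command would leave the env file ready for the agent. Keep the chosen string somewhere safe, since the same key is needed to decrypt the state later.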

And that's it!

The agent should be marked as Active and be ready to handle deployments.

Enabling Multi-Concurrency

Do you have multiple deployments waiting to run, and you don't want them to wait?

Each container is designed to handle a single deployment at a time.

To run multiple deployments in parallel, start another deployment agent with the docker run command. You can run as many containers as your deployment needs require.
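As an illustration of running several agents side by side, the loop below starts a given number of containers in the background. It is a sketch under assumptions: the function name start_agents and the container name prefix env0-deployment-agent are made up for this example, and it relies only on the standard docker run flags -d, --name, and --env-file.

```shell
# Hypothetical helper: start N deployment-agent containers in detached mode.
#   $1 = number of agents to start
#   $2 = path to env0.env
start_agents() {
  count="$1"
  env_file="$2"
  i=1
  while [ "$i" -le "$count" ]; do
    # Each container handles one deployment at a time, so N containers
    # allow up to N parallel deployments.
    docker run -d \
      --name "env0-deployment-agent-$i" \
      --env-file "$env_file" \
      ghcr.io/env0/deployment-agent:latest
    i=$((i + 1))
  done
}
```

For example, start_agents 3 ~/path/to/env0.env would launch three agents that share the same configuration file.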

Cloud Credential Configurations

These are optional configurations, and typically role authentication will be handled by native container service mechanisms. See note below regarding exposing Environment Variables for native authentication.

| Keys | Description |
|---|---|
| | plaintext, access key and secret key user role that is used to assume the deployment credentials specified in the env0 project configurations. |

For GCP and Azure, you can set the relevant environment variables in the container and use ADDITIONAL_ENV_VARS to expose them to the runner environment.

Secrets

These are optional configurations; secrets are typically handled by native authentication mechanisms in AWS, GCP, or Azure if you're using managed container services like ECS.

| Keys | Description |
|---|---|
| | plaintext, access key & secret key user role that has the following permission: |
| | When enabled, the agent will authenticate to AWS SSM using env0 OIDC |
| | Custom role for AWS SSM secret fetching, Note: only used when |
| | plaintext, using HashiCorp Vault to store secrets |
| | plaintext, Google service account credentials to fetch GCP secrets requires |
| | plaintext, using Azure Key Vault Secrets to store secrets for the agent |

Cost Estimation

| Key | Description |
|---|---|
| INFRACOST_API_KEY | Infracost API Key, create a personal / organizational key at infracost.io |

Running a vcs-agent (for on-prem / self-hosted VCS)

If you use a self-hosted version control system such as Bitbucket Server, GitLab Enterprise, or GitHub Enterprise, you need to run the vcs-agent.

It must run alongside the deployment-agent in order to interact with your private VCS.

- Add credentials for the relevant VCS to the env0.env file (see the variables table below) as plaintext

- Run docker:

docker run --env-file ~/path/to/env0.env ghcr.io/env0/vcs-agent:latest
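Because the vcs-agent runs alongside the deployment-agent, one way to start both from the same env0.env file is sketched below. The function name start_env0_agents and the container names are illustrative assumptions; the image names are the ones used in the commands above.

```shell
# Hypothetical helper: start the deployment-agent and the vcs-agent together.
#   $1 = path to env0.env (must already contain the VCS credentials)
start_env0_agents() {
  env_file="$1"
  for image in deployment-agent vcs-agent; do
    # -d runs each container in the background so both stay up in parallel.
    docker run -d \
      --name "env0-$image" \
      --env-file "$env_file" \
      "ghcr.io/env0/$image:latest"
  done
}
```

After start_env0_agents ~/path/to/env0.env, docker ps should list both containers.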

ℹ️ env0 has a public docker registry on GitHub which is maintained here.

| Keys | Description | Required for feature |
|---|---|---|
| | Bitbucket Server credentials in the format | On-premise Bitbucket Server installation |
| | GitLab Enterprise credentials in the form of a Personal Access Token. | On-premise GitLab Enterprise installation |
| | In cases where your GitLab instance base URL is not at the root of the URL but under a separate path, e.g | On-premise GitLab Enterprise installation |
| | GitHub Enterprise Integration (see step 3) | On-premise GitHub Enterprise installation |

Exposing Custom Environment Variables

By default, env0's runner does not expose all the environment variables defined in the container. This adds a measure of safety by isolating the container environment from the runner's environment. However, in certain scenarios you may still want to expose environment variables defined in the container. For example, when running in AWS ECS, you may want to use the cluster role for authorization.

Key | Description |

|---|---|

|

|

AWS ECS

When you define a role for the container, AWS sets the environment variable AWS_CONTAINER_CREDENTIALS_RELATIVE_URI. Add this variable to ADDITIONAL_ENV_VARS so that it is exposed to the runner environment.

For example, ADDITIONAL_ENV_VARS=["AWS_CONTAINER_CREDENTIALS_RELATIVE_URI"]
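The value in the example above looks like a JSON array of variable names. The helper below builds such a line from a list of names. It is a sketch: the function name additional_env_vars_line is hypothetical, and the comma-separated form for more than one name is an assumption based on the single-entry example, not something this page confirms.

```shell
# Hypothetical helper: print an ADDITIONAL_ENV_VARS line for the given names.
additional_env_vars_line() {
  list=""
  for name in "$@"; do
    # Put a comma before every entry except the first.
    if [ -n "$list" ]; then
      list="$list,"
    fi
    list="$list\"$name\""
  done
  printf 'ADDITIONAL_ENV_VARS=[%s]\n' "$list"
}
```

Running additional_env_vars_line AWS_CONTAINER_CREDENTIALS_RELATIVE_URI prints the same line as the example above, which can then be appended to env0.env.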
